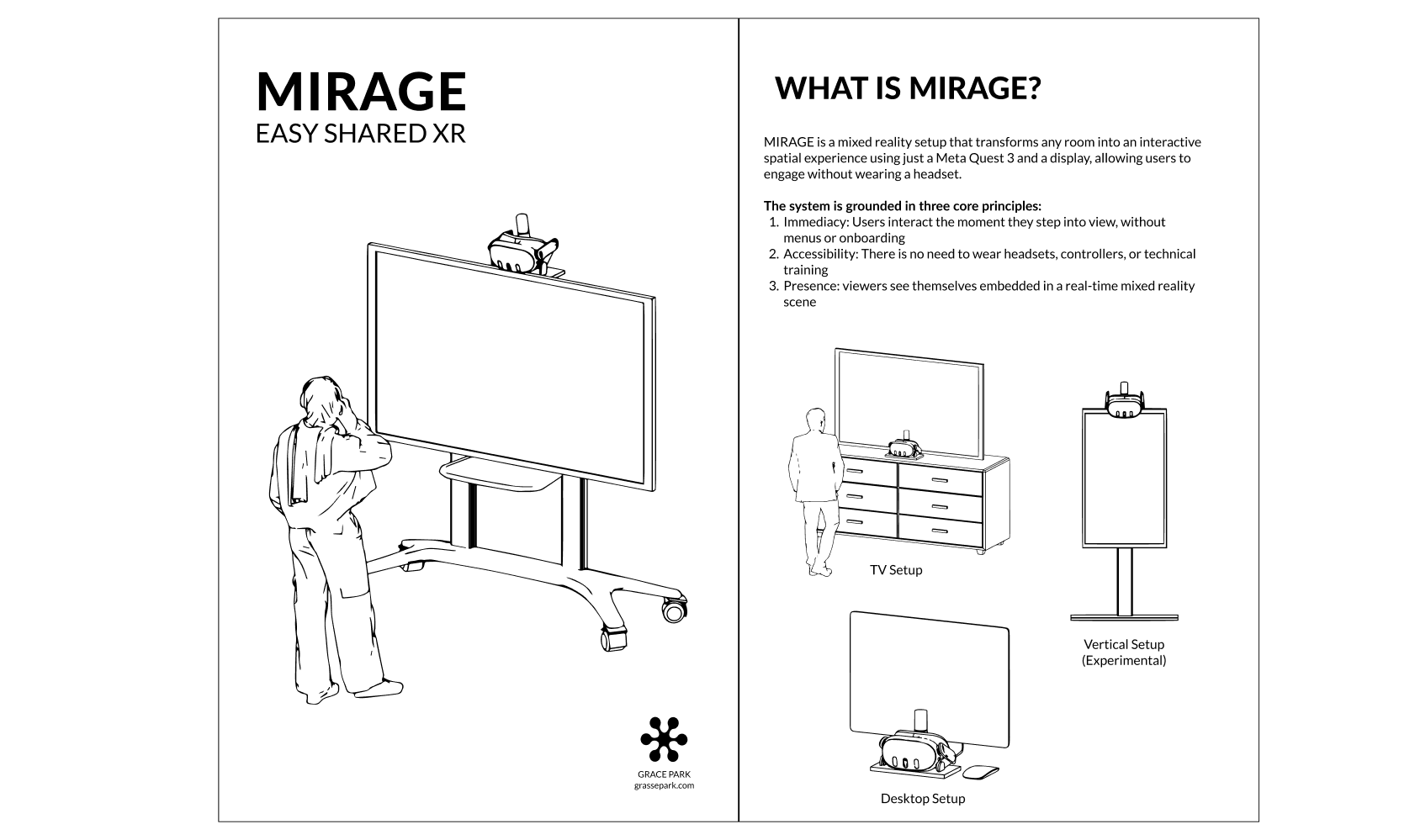

MIRAGE is a mixed reality setup that uses a Meta Quest 3 and an external display to create a mirror-like spatial experience without requiring users to wear a headset. Designed to be intuitive, shared, and self-contained, it brings immersive content to anyone in the room.

1. AI Design, no candy wrapper

Many AI products take a shortcut by wrapping APIs like OpenAI or Gemini in simple chatbot interfaces, which often results in generic experiences. With Mira, the exhibition guide I built on the MIRAGE setup, I aimed for a more deliberate approach. To get the behaviors I wanted while staying mindful of energy consumption, Mira used Retrieval-Augmented Generation (RAG) for context-aware responses and ran Qwen locally as a fallback, reducing reliance on cloud-based APIs.
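The retrieve-then-generate pattern with a fallback can be sketched roughly as follows. This is a minimal illustration, not Mira's actual code: the bag-of-words retriever, the sample `NOTES`, and the `flaky_cloud` / `local_stub` callables are all hypothetical stand-ins. A real build would likely use an embedding model for retrieval and real model clients (for example, a locally hosted Qwen) in place of the stubs.

```python
import math
import re
from collections import Counter
from typing import Callable, Sequence

# Hypothetical knowledge base; a real guide would load exhibition notes from disk.
NOTES = [
    "The thesis show runs in the main gallery on the second floor.",
    "Restrooms are located next to the elevator.",
    "MIRAGE uses a Quest 3 and an external display.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, notes: Sequence[str], k: int = 2) -> list[str]:
    """Return the k notes most similar to the query."""
    q = tokenize(query)
    ranked = sorted(notes, key=lambda n: cosine(q, tokenize(n)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: Sequence[str]) -> str:
    """Put the retrieved notes in front of the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


def answer(
    query: str,
    notes: Sequence[str],
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    k: int = 2,
) -> str:
    """Try the primary generator; if it raises, use the fallback instead."""
    prompt = build_prompt(query, retrieve(query, notes, k))
    try:
        return primary(prompt)
    except Exception:  # a real build would catch narrower, client-specific errors
        return fallback(prompt)


def flaky_cloud(prompt: str) -> str:
    """Hypothetical cloud client that is currently unreachable."""
    raise ConnectionError("cloud API unreachable")


def local_stub(prompt: str) -> str:
    """Hypothetical stand-in for a locally hosted model."""
    return "[local model] " + prompt


print(answer("Where are the restrooms?", NOTES, flaky_cloud, local_stub, k=1))
```

Passing the generators in as plain callables keeps the retrieval step independent of any particular model, so the cloud and local paths see the same grounded prompt.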

2. Building products with a “hacker” mentality

As this was my last project at Parsons, I wanted to build something free from the pressure of becoming a real product, something that instead showed my thinking process and my hopes for the future. Much like at a hackathon, I prototyped new systems quickly, right up to the last 48 hours!

How can we enable shared mixed reality experiences without the constraints of headsets?

I aimed to redesign the Quest 3 experience to be more immediate and accessible, and to give a sense of presence and smooth embodiment.

Before my final year at Parsons even began, I had already had a slew of bad XR experiences.

From attending exhibitions to eventually leading them, I consistently found headsets to be the biggest barrier to a positive user experience, especially for users like myself.

At the 2024 Design and Technology Thesis Show, the space was so hot I couldn’t bring myself to wear a headset at all. XR exhibitions I managed often required lengthy onboarding, extensive training, and significant resources for sanitization and technical troubleshooting. Even personally, the Quest 3 gave me a headache just 15 minutes into use, which ultimately led me to purchase a $60 counterweighted head strap just to make it tolerable.

Prototyping

The first semester was dedicated to research: studying precedents and formats. After experimenting with a projector, an Ultraleap, and custom headset fixtures, I landed on a voice and Ultraleap setup. I later scrapped the Ultraleap in favor of the Camera API so that users could stand farther from the headset.

The MIRAGE Setup

The final setup is designed to be experienced in a multitude of ways, whether you're interacting through a headset, observing from the physical mirrored display, or engaging with the ambient audio and spatial elements in the room.

To guide users and exhibitors through the modular mixed reality installation, I created an IKEA-style instruction booklet that visually and playfully breaks down how to assemble, navigate, and present MIRAGE in any environment. The booklet reflects the approachable tone of the experience and serves as a standalone artifact that blends physical design with spatial computing, making MIRAGE accessible even to those unfamiliar with XR.

For the first example of the MIRAGE setup, I found it only fitting to build an exhibition guide that could answer questions about the thesis show, explore the show, and interact with guests.

Mira, the Exhibition Guide

Mira showing a diagram of the space

Mira taking a photo

I’d like to keep developing MIRAGE as a medium that future prototypers can build on.

Some future features include:

1. A public GitHub repo with easy-to-use components

2. Optimized hand, body, and person tracking

3. A build of Mira for users to try and take home

Thank you!

I'm deeply grateful to my thesis advisors, Colleen Macklin and Brad MacDonald, for believing in this idea even more than I did, and for pushing me to turn it into the prototype it is today. My time at Parsons has been invaluable in shaping how I approach design challenges with thoughtfulness and critical perspective.